Examining the Possibilities of Using Artificial Intelligence in the Counseling Setting: Part 1

Part 1: Promising Leads

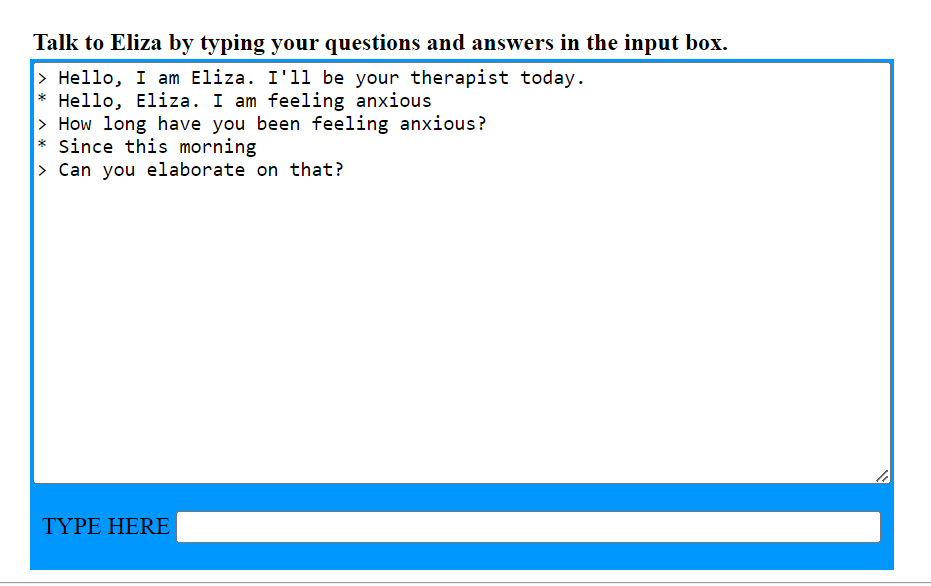

Since ChatGPT launched in November 2022, Google searches for artificial intelligence have risen to the highest level ever recorded. Although public interest in artificial intelligence has surged only recently, the technology has been studied and used since the 1950s. In fact, the ethics and applications of artificial intelligence in mental health treatment have been debated since 1966, when Joseph Weizenbaum, a computer scientist at MIT, created ELIZA, widely regarded as the first artificially intelligent therapist. ELIZA was programmed to ask questions and respond in the style of Rogerian (person-centered) therapy. An example of an interaction between the author of this article and a modern version of ELIZA is included below.

Although Weizenbaum did not intend ELIZA to provide actual psychotherapy, its ability to paraphrase users' statements and reflect them back led many people to converse with ELIZA and respond to her emotionally, as if she were human. More than 50 years after ELIZA's creation, incorporating artificial intelligence into therapy still raises many uncertainties. With psychological disorders increasing by 13% worldwide over the last decade and the shortage of mental healthcare providers worsening, researchers are weighing how large a role artificial intelligence can and should play in mental health treatment.
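ELIZA did not understand what users told it; it relied on keyword matching and scripted rules for turning a user's statement into a reply. The Python sketch below is a deliberately simplified, hypothetical illustration of that reflection technique, not a reconstruction of Weizenbaum's original program or its DOCTOR script. The pronoun table `REFLECTIONS`, the three `RULES`, and the `reflect` and `respond` functions are all invented for illustration.

```python
import re

# Hypothetical pronoun swaps used to "reflect" a statement back to the user.
REFLECTIONS = {
    "i": "you",
    "me": "you",
    "my": "your",
    "am": "are",
    "i'm": "you're",
    "you": "I",
    "your": "my",
}

# Hypothetical (pattern, reply template) rules in a Rogerian style.
# These are illustrative only, not Weizenbaum's original DOCTOR script.
RULES = [
    (re.compile(r"i feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"my (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Generic prompt used when no rule matches, to keep the user talking.
FALLBACK = "Please go on."


def reflect(fragment: str) -> str:
    """Lowercase the fragment and swap first- and second-person words."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement: str) -> str:
    """Return a reflective reply using the first rule that matches the statement's start."""
    cleaned = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.match(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK
```

For example, `respond("I feel anxious about my exams.")` returns "Why do you feel anxious about your exams?". Even this tiny rule set produces a reply that sounds attentive, although the program has no understanding of what anxiety or exams are.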

One artificial intelligence tool that has shown promising efficacy is WoeBot, an app created by clinical psychologist Dr. Alison Darcy. WoeBot uses machine learning to engage users in conversation and teach cognitive behavioral therapy (CBT) strategies. For example, WoeBot references previous conversations during check-ins and guides users through interventions, such as challenging unhelpful thoughts or using coping strategies, depending on the symptoms they report. If a user reports feeling anxious, WoeBot may encourage them to examine the thoughts behind the anxiety or suggest a deep breathing exercise.

Studies of WoeBot's efficacy have found evidence that it may help in treating several mental health conditions. In 2020, a team of researchers from Stanford University and WoeBot studied an adaptation of WoeBot for substance use treatment. After participants used WoeBot for 8 weeks, the researchers found a significant decrease in reported substance use and cravings, as well as an increase in participants' reported confidence in resisting urges to use drugs or alcohol. An earlier study also found a significant reduction in reported depressive symptoms among participants using WoeBot compared with a control group.

Emerging research is also examining the effectiveness of other artificial intelligence applications. A study of Kai, an app based on Acceptance and Commitment Therapy (ACT), found that participants who engaged with the app more frequently showed greater improvement in overall psychological well-being, as measured by the World Health Organization Well-Being Index. Research has also produced promising findings on using artificial intelligence to help diagnose conditions such as schizophrenia and mood disorders and to assess suicide risk.

Although these studies suggest that artificial intelligence may have clinical utility, ethical concerns and gaps in the research may still limit its applicability in the counseling setting. In the next two articles, we will explore these ethical and research considerations, as well as how black-box decision-making and data inequity can lead to biased results.